what can a researcher do to influence the size of the standard error

How is Sample Size Related to Standard Error, Power, Confidence Level, and Result Size?

Using graphs to demonstrate the correlations

When conducting statistical analysis, particularly during experimental pattern, i practical issue that one cannot avoid is to determine the sample size for the experiment. For example, when designing the layout of a spider web page, nosotros want to know whether increasing the size of the click push button will increase the click-through probability. In this example, AB testing is an experimental method that is ordinarily used to solve this trouble.

Moving to the details of this experiment, you will beginning decide how many users I will need to assign to the experiment group, and how many we need for the control grouping. The sample size is closely related to four variables, standard mistake of the sample, statistical power, confidence level, and the effect size of this experiment.

In this article, we will demonstrate their relationships with the sample size by graphs. Specifically, we will discuss different scenarios with one-tail hypothesis testing.

Standard Fault and Sample Size

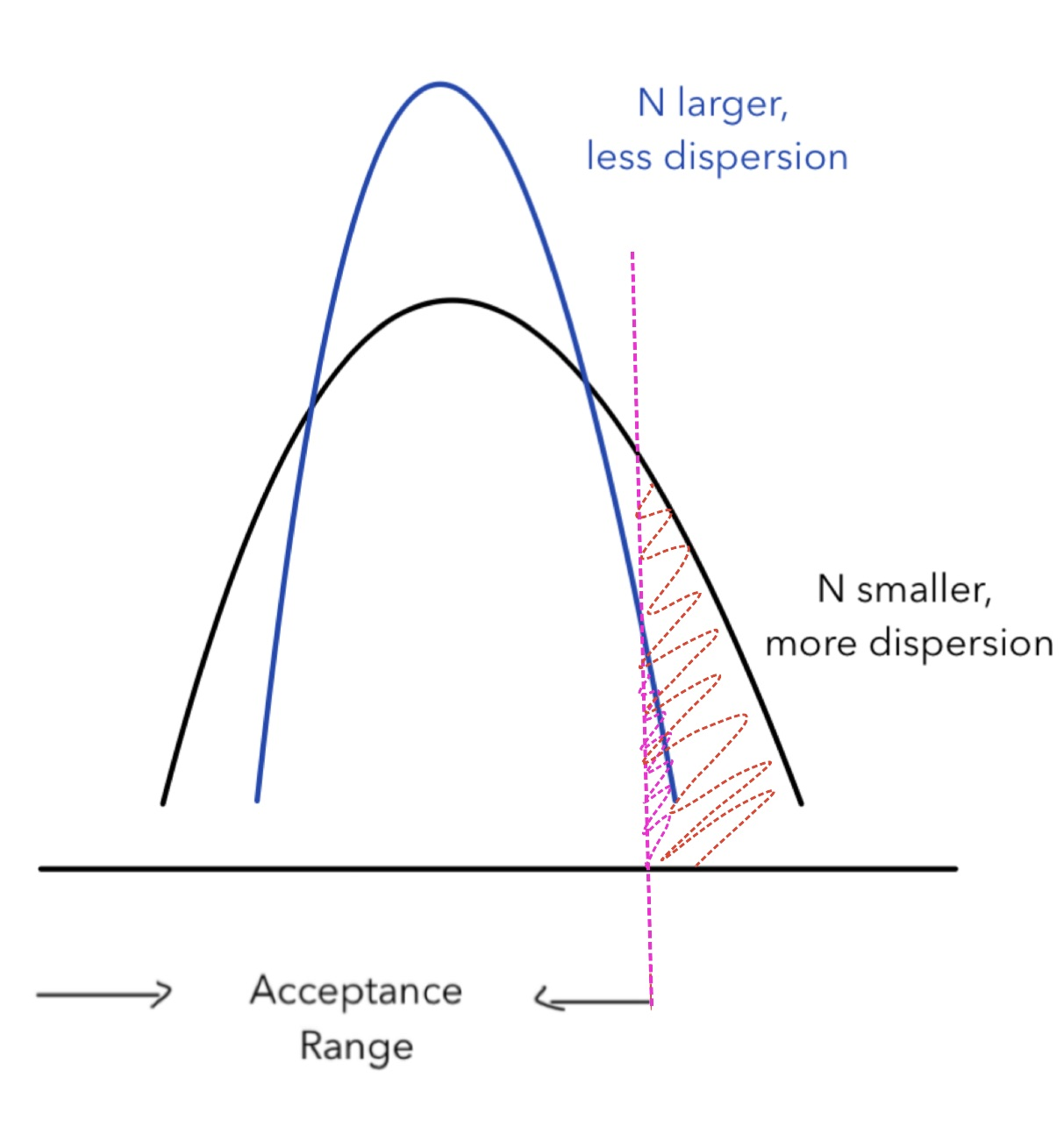

The standard error of a statistic corresponds with the standard deviation of a parameter. Since it is near impossible to know the population distribution in most cases, we tin estimate the standard difference of a parameter by calculating the standard fault of a sampling distribution. The standard error measures the dispersion of the distribution. As the sample size gets larger, the dispersion gets smaller, and the mean of the distribution is closer to the population mean (Key Limit Theory). Thus, the sample size is negatively correlated with the standard fault of a sample. The graph below shows how distributions shape differently with different sample sizes:

As the sample size gets larger, the sampling distribution has less dispersion and is more centered in by the mean of the distribution, whereas the flatter curve indicates a distribution with higher dispersion since the data points are scattered across all values.

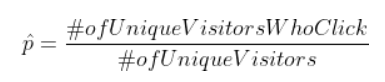

Understanding the negative correlation betwixt sample size and standard fault help conduct the experiment. In the experiment design, it is essential to constantly monitor the standard error to see if we need to increase the sample size. For example, in our previous case, we want to run into whether increasing the size of the bottom increases the click-through rate. The target value nosotros need to measure in both the control group and the experiment group is the click-through rate, and it is a proportion calculated as:

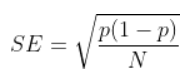

the standard mistake for a proportion statistic is:

The standard error is at the highest when the proportion is at 0.5. When conducting the experiment, if observing p getting shut to 0.5(or ane-p getting close to 0.five), the standard fault is increasing. To maintain the same standard fault, we demand to increment Due north, which is the sample size, to reduce the standard error to its original level.

Statistical Power and Sample Size

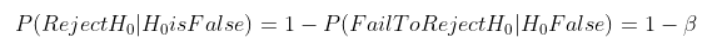

Statistical power is also chosen sensitivity. It is calculated by 1- β, where β is the Type II error. Higher power ways you are less likely to make a Type Ii fault, which is failing to reject the null hypothesis when the null hypothesis is false. As stated here:

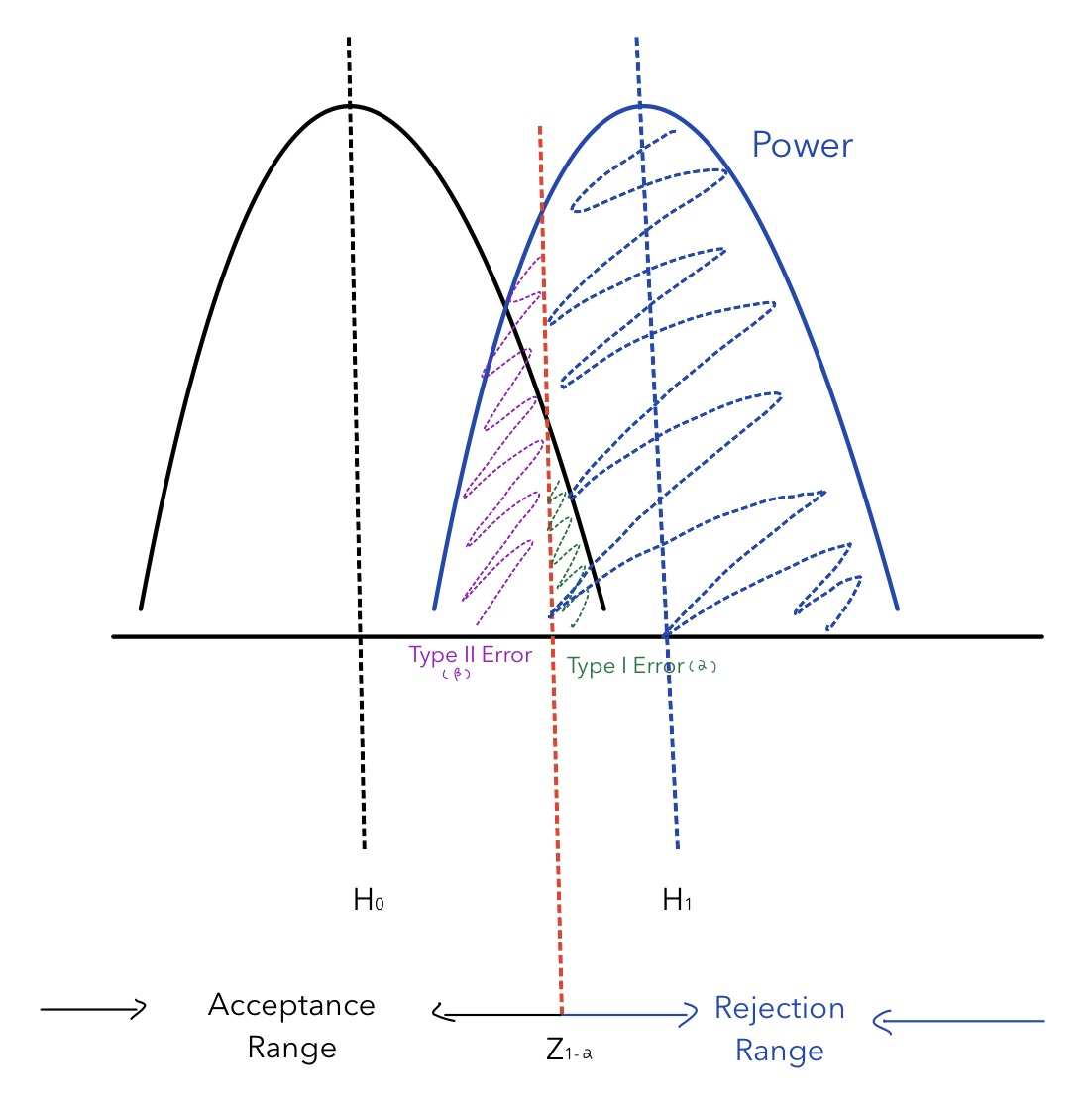

In other words, when reject region increases (acceptance range decreases), it is probable to refuse. Thus, Type I error increases while Type 2 mistake decreases. The graph below plots the relationship among statistical power, Type I error (α) and Type II error (β) for a ane-tail hypothesis testing. After choosing a confidence level (1-α), the blue shaded expanse is the size of power for this detail analysis.

From the graph, it is obvious that statistical ability (1- β) is closely related to Type II fault (β). When β decreases, statistical power (one- β) increases. Statistical power is also affected to Type I fault (α), when α increases, β decreases, statistical power (1- β) increases.

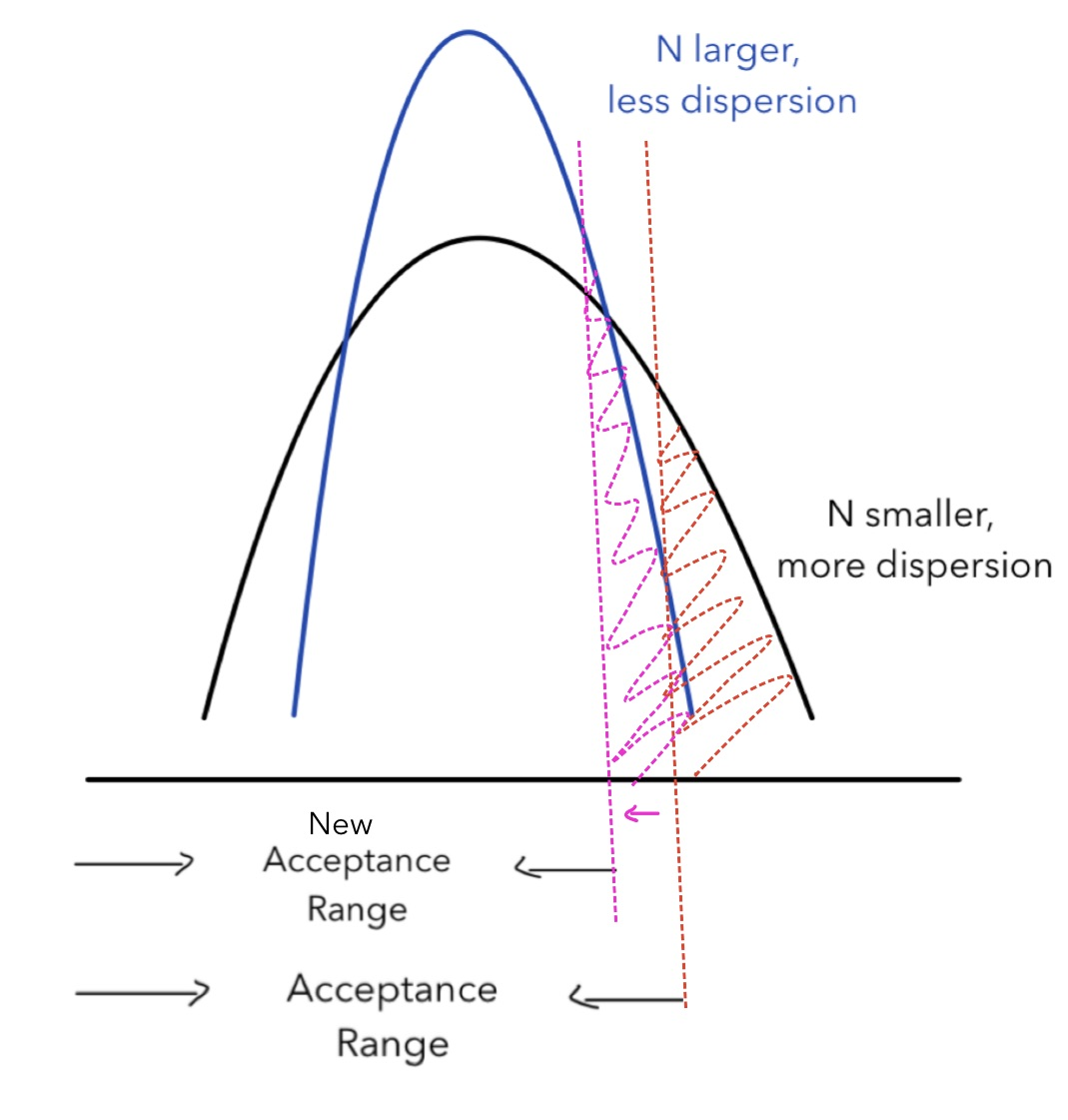

The red line in the middle decides the tradeoff between the acceptance range and the rejection range, which determines the statistical power. How does the sample size affect the statistical ability? To answer this question, we need to alter the sample size and see how statistical ability changes. Since Type I mistake also changes corresponded with the sample size, we need to concur it abiding to uncover the human relationship betwixt the sample size and the statistical power. The graph below illustrates their relationship:

When the sample size increases, the distribution will be more full-bodied around the mean. To hold Blazon I error abiding, we need to decrease the critical value (indicated by the ruby and pink vertical line). As a outcome, the new acceptance range is smaller. Equally stated above, when it is less likely to accept, it is more likely to reject, and thus increases statistical ability. The graph illustrates that statistical power and sample size accept a positive correlation with each other. When the experiment requires higher statistical power, yous demand to increase the sample size.

Conviction Level and Sample Size

As stated above, the confidence level (1- α) is likewise closely related to the sample size, as shown in the graph beneath:

Equally the acceptance range keeps unchanged for both blueish and blackness distributions, the statistical ability remains unchanged. Every bit the sample size gets larger (from blackness to blueish), the Type I mistake (from the red shade to the pink shade) gets smaller. For one-tail hypothesis testing, when Type I error decreases, the conviction level (1-α) increases. Thus, the sample size and confidence level are besides positively correlated with each other.

Issue Size and Sample Size

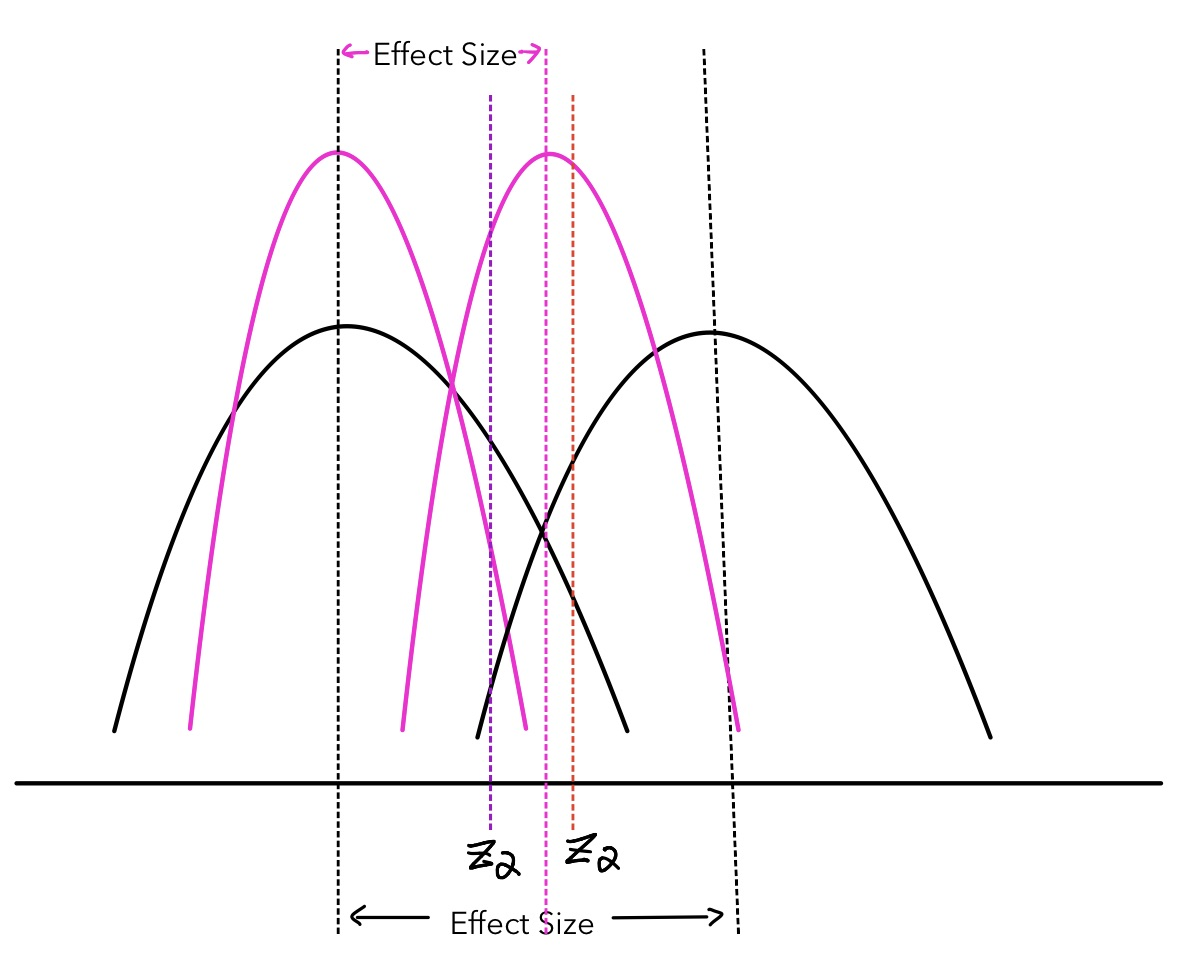

The result size is the applied significant level of an experiment. Information technology is set up by the experiment designer based on practical situations. For instance, when we desire to check whether increasing the size of the bottom in the webpage increases the click-through probabilities, nosotros need to ascertain how much of a difference we are measuring between the experiment grouping and the control group is practically meaning. Is a 0.1 deviation significant enough to attract new customers or generate significant economic profits? This is the question the experiment designer has to consider. Once the effect size is gear up, nosotros can use it to decide the sample size, and their relationship is demonstrated in the graph below:

Every bit the sample size increases, the distribution get more pointy (black curves to pink curves. To go along the conviction level the aforementioned, we need to move the disquisitional value to the left (from the red vertical line to the royal vertical line). If we do not move the alternative hypothesis distribution, the statistical power will decrease. To maintain the constant ability, we need to motion the alternative hypothesis distribution to the left, thus the effective upshot decreases as sample size increases. Their correlation is negative.

How to interpret the correlations discussed above?

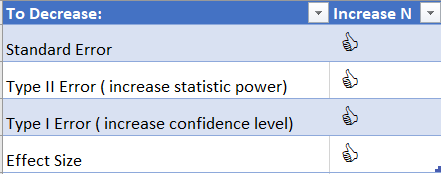

In summary, we accept the following correlations betwixt the sample size and other variables:

To interpret, or better memorizing the human relationship, we can see that when we need to reduce errors, for both Type I and Type 2 error, we need to increase the sample size. A larger sample size makes the sample a better representative for the population, and it is a better sample to use for statistical analysis. As the sample size gets larger, information technology is easier to notice the difference between the experiment and control group, even though the difference is smaller.

How to summate the sample size given other variables?

In that location are many ways to calculate the sample size, and a lot of programming languages have the packages to calculate it for you. For example, the pwr() bundle in R tin can exercise the work. Compared to knowing the exact formula, it is more important to empathize the relationships behind the formula. Promise this article helps you sympathize the relationships. Cheers for reading!

Here is the list of all my blog posts. Cheque them out if you are interested!

Source: https://towardsdatascience.com/how-is-sample-size-related-to-standard-error-power-confidence-level-and-effect-size-c8ee8d904d9c